According to Gartner®, by 2025, 40% of advanced Virtual Assistants will be skilled, domain-specific VxAs based on customized language models, up from less than 5% in 2021.

For IOT to reach its predicted explosive growth, the interfaces we use to interact with “things” must be natural. Solutions such as hand gestures, heads-up displays, eye movement, and augmented reality glasses are entering the mainstream. Hopefully, someday we can get rid of the keyboard entirely.

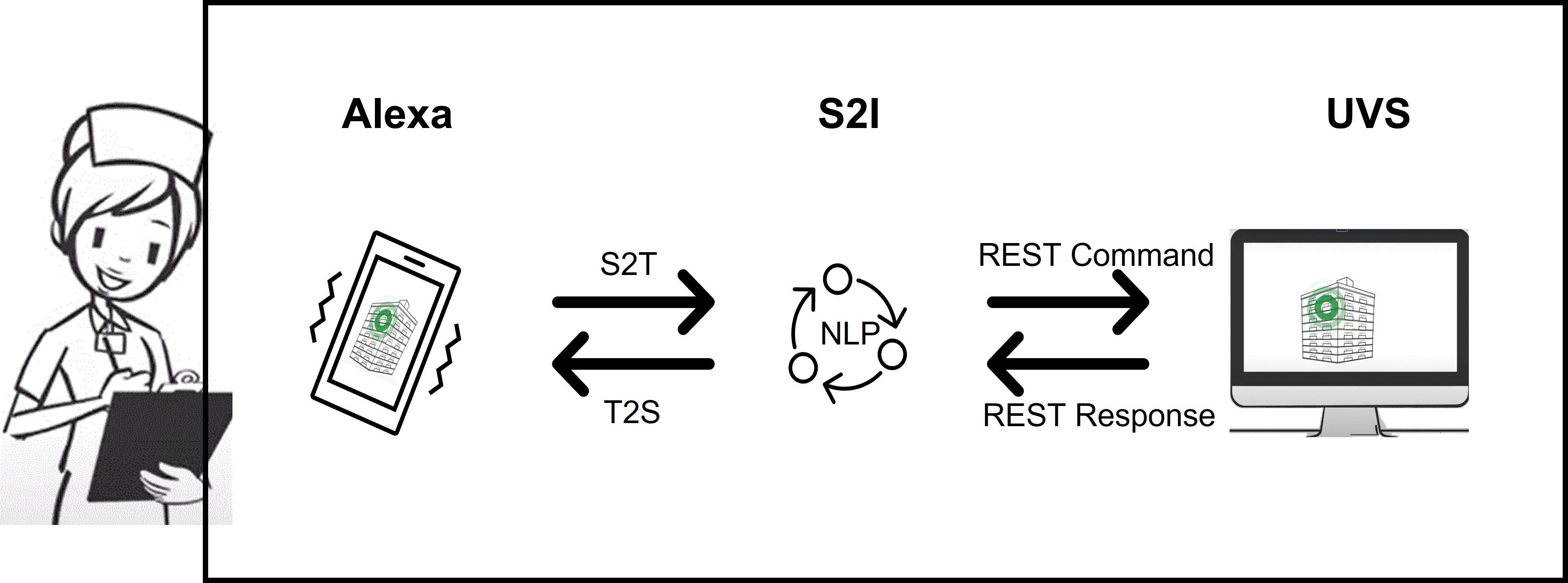

AiRISTA and our ecosystem partner Speak to IOT (S2I) have created an integration that adds a voice-based Virtual Assistant to the Unified Vision Solution (UVS) platform. Clinicians using healthcare RTLS solutions can now speak into their smartphone to interact with the RTLS platform. The demo uses several of the commands most useful to busy staff:

- How many wheelchairs are on the floor?

- Where is the nearest wheelchair?

- Where is the bariatric wheelchair?

In each case, S2I’s natural language processing (NLP) engine interprets the request, and the phone provides a voice response along with a floorplan served up from UVS showing the location of the requested resource.

The following two-minute video demonstrates our Virtual Assistant (VA) integration for UVS.

{% video_player "embed_player" overrideable=False, type='scriptV4', hide_playlist=True, viral_sharing=False, embed_button=False, autoplay=False, hidden_controls=False, loop=False, muted=False, full_width=False, width='1000', height='562', player_id='72952058780', style='' %}

How does this work?

We chose Alexa as the voice front end, although any voice front end will work. We begin by saying “Alexa,” the wake word that tells Alexa to begin capturing speech. Alexa translates each spoken phrase into a text string. The next spoken phrase, “Ask My Sofia,” is the invocation name used by S2I to invoke the specific NLP engine, or skill, used for location interactions.

At this point, S2I waits for user voice commands. It receives a command, or utterance, such as “how many wheelchairs are on floor two?” Using NLP algorithms, the skill translates the user command into a corresponding query to UVS via a REST API. UVS returns the number of wheelchairs to the S2I skill, which structures a text phrase as the response to the user. The text is sent to Alexa, which translates it into natural-sounding speech.
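For readers who want a concrete picture of that flow, here is a minimal Python sketch of how an utterance could become a REST query and then a spoken reply. It is an illustration under stated assumptions, not S2I's actual implementation: the regular expression stands in for a real NLP engine, and the base URL, the `/assets/count` path, the parameter names, and every function name are hypothetical. Only the shape of the returned dictionary follows the plain-text response format used by Alexa custom skills.

```python
import re
from typing import Callable, Optional
from urllib.parse import urlencode

# Hypothetical lookup for spoken floor numbers; a real NLP engine would do far more.
FLOOR_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


def parse_count_utterance(utterance: str) -> Optional[dict]:
    """Turn 'how many wheelchairs are on floor two?' into a structured query."""
    match = re.search(
        r"how many (?P<asset>[a-z ]+?)s? are on floor (?P<floor>\w+)",
        utterance.lower(),
    )
    if match is None:
        return None
    token = match.group("floor")
    floor = FLOOR_WORDS.get(token)
    if floor is None and token.isdigit():
        floor = int(token)
    if floor is None:
        return None
    return {"asset_type": match.group("asset").strip(), "floor": floor}


def build_query_url(base_url: str, query: dict) -> str:
    """Build the REST request URL. The path and parameters are assumptions."""
    return f"{base_url}/assets/count?{urlencode(query)}"


def handle_utterance(
    utterance: str,
    fetch_count: Callable[[str], int],
    base_url: str = "https://uvs.example.com/api",  # placeholder, not a real UVS URL
) -> dict:
    """Parse the utterance, query the location platform, and build a reply."""
    query = parse_count_utterance(utterance)
    if query is None:
        text = "Sorry, I did not understand that request."
    else:
        count = fetch_count(build_query_url(base_url, query))
        noun = query["asset_type"] + ("" if count == 1 else "s")
        verb = "is" if count == 1 else "are"
        text = f"There {verb} {count} {noun} on floor {query['floor']}."
    # Plain-text speech response in the shape Alexa custom skills return.
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


if __name__ == "__main__":
    # Stub standing in for the HTTP call to the location platform.
    reply = handle_utterance("How many wheelchairs are on floor two?", lambda url: 3)
    print(reply["response"]["outputSpeech"]["text"])
```

Passing `fetch_count` in as a function keeps the sketch runnable without network access; in a real skill it would wrap an authenticated HTTP request to the platform's REST API.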

Virtual Assistants are also being used by equipment vendors for a hands-free experience. For example, Thermo Fisher provides an Alexa VA interface for customers of their lab equipment. “AVS will fundamentally change the customer experience for scientists in dramatic ways. It will impact everything from operating a lab device hands-free to overall lab planning and beyond,” says Sean Baumann, vice president of information technology applications and connectivity at Thermo Fisher.

According to Gartner, most uses of VAs today are in banking, retail, communications and insurance, driven in part by COVID and the need to stay safe while reducing costs. However, future growth will be driven by professional, specialized VAs capable of performing skilled, domain-specific tasks. “This opportunity is driven by demand for VAs capable of high levels of task assistance, as well as less competition due to the emerging nature of vendor capabilities. These use cases are typically offered by more innovative companies. Gartner expects VA capability advancements to enable more specialization and task assistance in both the near and long terms. The various types of VxAs and associated opportunities are outlined below.”[1]

[1] Gartner®, “Emerging Technologies: Current and Emerging Use-Case Opportunity Patterns in Virtual Assistant Adoption”, Danielle Casey, October 28, 2021