If your IT organization hasn’t started using broker-based data streaming architectures, it soon will.

As data storage costs come down thanks to unstructured repositories (e.g. data lakes) and more data sources generate streams of data (e.g. clickstream data, IoT devices), IT projects will be ill-prepared for the future if they continue to rely on traditional batch processing models. Unlike batch models, streaming architectures consume data immediately, persist it to storage, and provide real-time tools such as machine learning, AI, data manipulation and analytics. Gregg Pessin of Gartner warns, “CIOs lacking an IoT platform strategy run the risk of perpetuating a disjointed, costly and overly complex environment that will impede digital innovation. The IoT platform is a member of the larger collection of technology platforms supporting the HDO that combine to enable the digitalization of administration, clinical operations and care delivery”.[1]

Why Streaming Platforms

An architecture that manages streams of data, using brokers to inspect and manipulate that data, can scale, handle massive volumes of data and integrate with disparate systems. Benefits include:

- Eliminates the need for large data engineering projects

- Built-in performance, high availability and fault tolerance

- Cloud delivery accelerates schedules

- Creates a common platform for multiple use cases

As streaming architectures evolve, they will become even more popular. Trends driving this shift include:

- A shift from data warehouse storage to data lakes as data volumes increase and storage costs come down. As “data in motion” is decoupled from compute, streaming data is available to a range of data consumers, simplifying integration.

- A shift from traditional structured, table-driven data to unstructured data. This data, stored in NoSQL “Big Data” repositories, is available to iterative, interactive processes that have no predetermined sense of data structure.

- Broker-based streaming architectures free engineering resources from data plumbing, allowing them to focus on higher-value activities.

What is a Streaming Platform

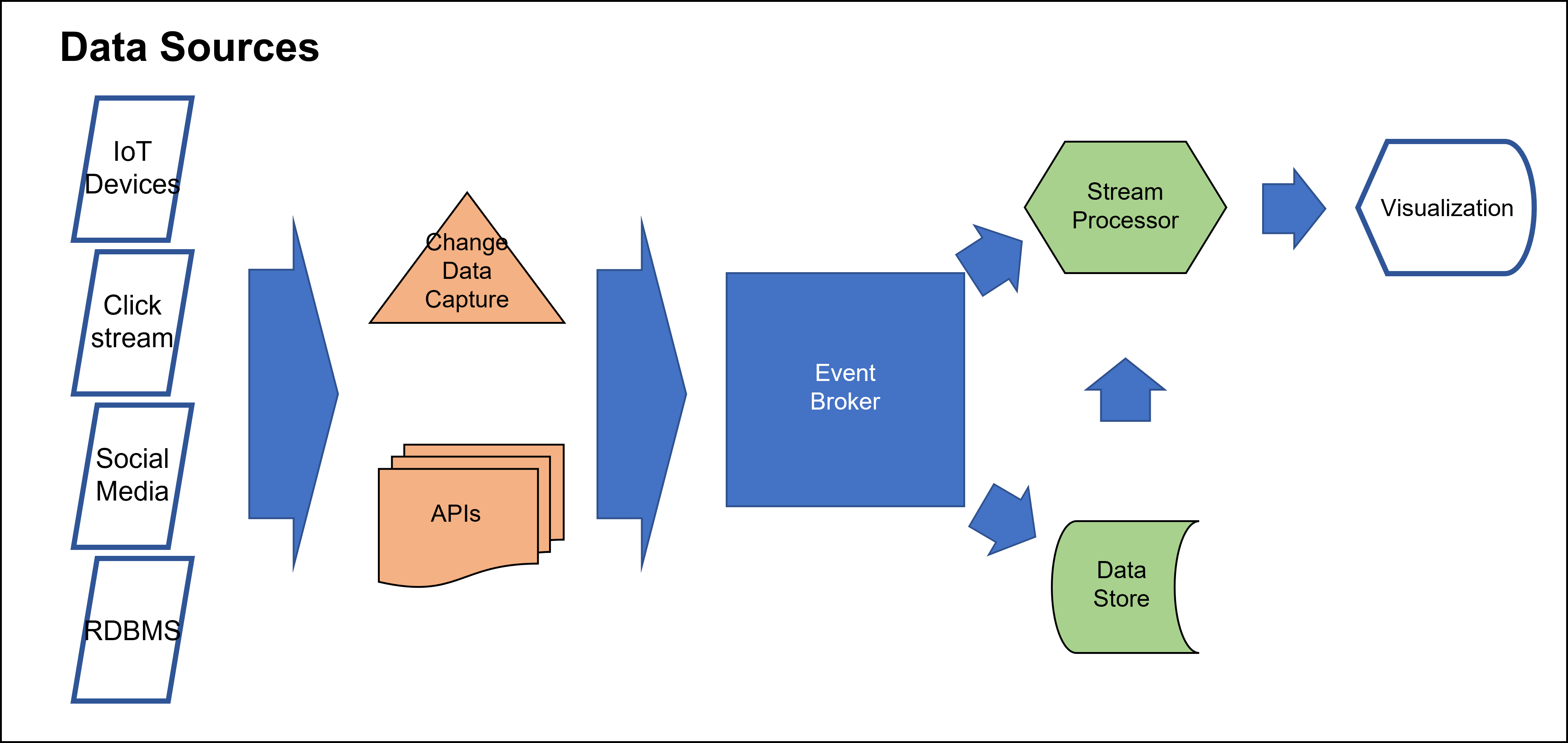

A streaming data architecture is a framework of software components built to ingest and process large volumes of streaming data from multiple sources. The following are components of a streaming, broker-based architecture. These components are available as open source, and commercial versions complete with support are also available.

Data Producers: the sources of data. Streaming data refers to data that is continuously generated, usually in high volumes and at high velocity. A streaming data source typically consists of a stream of logs that record events as they happen, such as users interacting with a website or real-time data from a machine.

Change Data Capture: a data ingestion pattern that tracks when and what changes occur in data, then alerts other systems and services that must respond to those changes.
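
As a rough illustration of the pattern, the sketch below compares two snapshots of a table keyed by record ID and emits insert, update and delete events. The names `ChangeEvent` and `capture_changes` and the bed-status records are hypothetical, and diffing snapshots is only one way to detect changes; production CDC tools often read the database's transaction log instead.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One change to a record (hypothetical event shape for illustration)."""
    op: str                 # "insert", "update" or "delete"
    key: str                # ID of the changed record
    before: Optional[Any]   # value before the change, None for inserts
    after: Optional[Any]    # value after the change, None for deletes


def capture_changes(old: dict, new: dict) -> list:
    """Diff two snapshots keyed by record ID and return the change events."""
    events = []
    for key in sorted(old.keys() | new.keys()):
        if key not in old:
            events.append(ChangeEvent("insert", key, None, new[key]))
        elif key not in new:
            events.append(ChangeEvent("delete", key, old[key], None))
        elif old[key] != new[key]:
            events.append(ChangeEvent("update", key, old[key], new[key]))
    return events


# Hypothetical snapshots of a bed-status table taken a few seconds apart.
previous = {"bed-1": "occupied", "bed-2": "vacant"}
current = {"bed-1": "vacant", "bed-3": "occupied"}

changes = capture_changes(previous, current)
for event in changes:
    print(event.op, event.key, event.before, "->", event.after)
```

Each event carries both the old and new value, so a downstream consumer can react to the change without querying the source system again.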

APIs: programming interfaces that facilitate data exchange between processes and applications. Beyond web-centric and RESTful interfaces, streaming interfaces support the high data rates necessary in streaming environments.

Event Broker: middleware software, appliance or SaaS used to transmit events between event producers and consumers in a publish-subscribe pattern.
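
The publish-subscribe pattern can be sketched in a few lines. The toy `EventBroker` class below is an in-process stand-in written for illustration, not a real broker or any vendor's API, and the topic names and payloads are assumptions. An actual broker would add networking, persistence and delivery guarantees.

```python
from collections import defaultdict
from typing import Callable


class EventBroker:
    """Toy in-process broker: routes each event to its topic's subscribers."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        """Register a consumer callback for one topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = EventBroker()
alerts, audit_log = [], []

# Two independent consumers; the second listens on two topics.
broker.subscribe("asset/location", alerts.append)
broker.subscribe("asset/location", audit_log.append)
broker.subscribe("asset/battery", audit_log.append)

delivered = broker.publish("asset/location", {"tag": "pump-17", "room": "ICU-2"})
broker.publish("asset/battery", {"tag": "pump-17", "level": 0.12})
unrouted = broker.publish("asset/temperature", {"tag": "fridge-3", "celsius": 4.1})

print(delivered, unrouted, len(alerts), len(audit_log))
```

The producer only names a topic and never references a consumer, which is what lets new consumers be added without touching the producing system.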

Stream Processor: ingests a continuous data stream to quickly analyze, filter, transform or enhance the data in real time. Once processed, the data is passed to an application, data store or another stream processing engine.
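
A minimal sketch of the filter, transform and enhance steps, using chained Python generators so each event flows through every stage as it arrives. The stage names, the `DEVICE_ROOMS` lookup table and the sensor readings are all hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical lookup table used to enrich events with a device's location.
DEVICE_ROOMS = {"sensor-1": "Pharmacy", "sensor-2": "Lab"}


def drop_invalid(events: Iterable[dict]) -> Iterator[dict]:
    """Filter: discard readings without a numeric temperature."""
    for event in events:
        if isinstance(event.get("temp_f"), (int, float)):
            yield event


def to_celsius(events: Iterable[dict]) -> Iterator[dict]:
    """Transform: convert Fahrenheit readings to Celsius."""
    for event in events:
        celsius = round((event["temp_f"] - 32) * 5 / 9, 1)
        yield {"device": event["device"], "temp_c": celsius}


def add_room(events: Iterable[dict]) -> Iterator[dict]:
    """Enhance: attach the room each device is installed in, if known."""
    for event in events:
        yield {**event, "room": DEVICE_ROOMS.get(event["device"], "unknown")}


raw_stream = [
    {"device": "sensor-1", "temp_f": 41.0},
    {"device": "sensor-2", "temp_f": None},   # dropped by the filter stage
    {"device": "sensor-9", "temp_f": 212},    # device missing from the lookup
]

# Stages compose like a pipeline; each event is pulled through one at a time.
processed = list(add_room(to_celsius(drop_invalid(raw_stream))))
for event in processed:
    print(event)
```

Because the stages are lazy generators, the same pipeline works on an unbounded source; the list here only stands in for a live feed.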

Data Store: a repository, such as a data lake, that allows real-time data to be correlated with historical records. Whether these repositories receive their feeds from an event broker or a batch ETL tool, they often contain data useful to streaming analytics.
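
One way to picture this correlation is to score each live reading against the historical values for the same device. The sketch below assumes a hypothetical `HISTORY` table already loaded from the data store, and uses a simple z-score with an arbitrary threshold; it is an illustration of the idea, not a recommended anomaly detector.

```python
from statistics import mean, stdev

# Hypothetical historical flow-rate records, e.g. loaded from a data lake.
HISTORY = {
    "infusion-pump-4": [10.1, 9.8, 10.0, 10.3, 9.9],
}


def is_anomalous(device: str, value: float, threshold: float = 3.0) -> bool:
    """Flag a live reading that sits far outside the device's history."""
    past = HISTORY.get(device, [])
    if len(past) < 2:
        return False  # not enough history to judge
    sigma = stdev(past)
    if sigma == 0:
        return value != mean(past)
    return abs(value - mean(past)) / sigma > threshold


# Hypothetical live readings arriving from the stream.
live_readings = [
    ("infusion-pump-4", 10.2),
    ("infusion-pump-4", 12.5),
    ("infusion-pump-9", 50.0),  # no history for this device
]

flags = [is_anomalous(device, value) for device, value in live_readings]
print(flags)
```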

Visualization: dashboards and charts make business sense of the data. These graphical solutions can help your organization democratize data and increase data literacy by delivering intuitive graphical results.

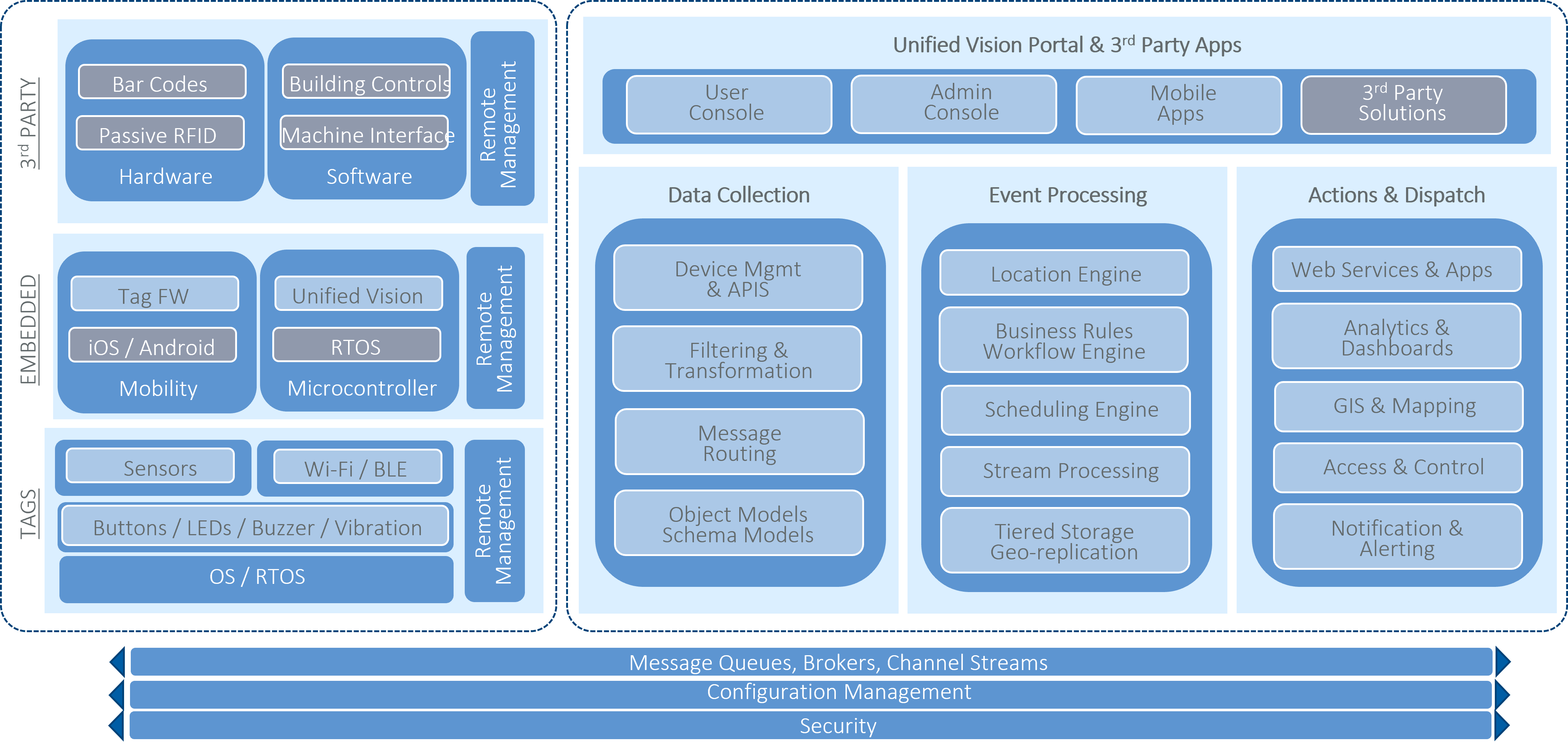

AiRISTA’s Unified Vision Solution Architecture

With over 10 years of field hardening, AiRISTA’s UVS solution platform has been updated to embrace streaming data. Inter-process communication uses brokers which subscribe to specific “topics”. Event brokers are used to inspect and direct data streams to various consumers. Written in Go (also known as Golang), UVS is optimized for distributed network services and cloud-native apps. As a result, UVS can do cool things like:

- HL7 protocol manipulation on the fly (e.g. enable different versions of HL7 to interoperate transparently)

- Consume real-time data feeds from wireless infrastructure (e.g. support MQTT data feeds from hundreds of BLE devices scanned by each access point)

- Dynamically steer data across a 3-tier model, from onboard processor memory to platform storage to data lakes.

- ML-ready to combine location information with intra-machine coordination

- AI-ready for real-time inspection of, and reaction to, data feeds from streaming devices such as video cameras
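
To make the idea of subscribing to “topics” more concrete, here is a simplified sketch of MQTT-style topic filter matching, where `+` matches exactly one topic level and `#` matches all remaining levels. It is an illustration only and not how UVS is implemented: the `topic_matches` helper and the topic names are hypothetical, and the sketch skips filter validation and the special handling of `$`-prefixed topics found in the MQTT specification.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT-style filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True   # multi-level wildcard: matches everything from here
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    # No "#" was seen, so the filter and topic must have the same depth.
    return len(filter_levels) == len(topic_levels)


# Hypothetical topics published by access points scanning BLE tags.
subscription = "site/+/ble/#"
incoming = [
    "site/ap-12/ble/tag-7/rssi",
    "site/ap-40/ble/tag-9/battery",
    "site/ap-12/wifi/client-3",
]

routed = [topic for topic in incoming if topic_matches(subscription, topic)]
print(routed)
```

A single wildcard subscription like this lets one consumer receive the BLE feeds from every access point without listing each device.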

UVS makes available popular connectors for data producers such as Kafka, Pulsar, AWS S3, Dropbox, Salesforce, Google, Azure, etc. UVS also streams data to these popular systems.

Collaboration between IT and the Business Units on Technology Selection

As decisions about technology selection are pushed to the business unit, there is a danger that islands of functionality will proliferate and the value of shared insights across departments will be lost. Architecting a technology platform ready for the IoT world requires IT to create specifications that ensure platforms can communicate. Because the patient experience spans multiple departments, a technology backbone must underpin the technologies deployed by the various units. According to Gregg Pessin, “The departmental approach to managing smart devices within an HDO hides the potential enterprisewide benefits of developing an IoT platform approach and implementing a strategy.”

[1] Gartner, “Healthcare Provider’s Unique IoT Challenges Demand a Platform Strategy”, Gregg Pessin, June 26 2019